Large language models (LLMs) still have a habit of making things up—what researchers call hallucinations. These outputs can look perfectly plausible yet be factually wrong or unfaithful to their source.

This article distills the latest research from OpenAI, Anthropic, leading NLP conferences, and peer-reviewed studies into a single, up-to-date view of how our understanding of LLM hallucinations has evolved, so you don’t have to chase down a dozen papers.

Classic causes such as noisy data, architectural quirks, and decoding randomness remain part of the story, but this year’s research reframes hallucinations as a systemic incentive problem and introduces concrete ways to measure and reduce them across languages and even multimodal settings.

TL;DR

- Incentives drive guessing. OpenAI’s September 2025 paper shows that next-token training objectives and common leaderboards reward confident guessing over calibrated uncertainty, so models learn to bluff.

- Refusal can be trained, not just prompted. Anthropic’s Tracing the Thoughts of a Large Language Model demonstrates how internal “concept vectors” can be steered so that Claude learns when not to answer, turning refusal into a learned policy rather than a fragile prompt trick.

- Hallucinations cross languages and modalities. Benchmarks such as Mu-SHROOM (SemEval 2025) and CCHall (ACL 2025) reveal that even frontier models stumble in multilingual and multimodal reasoning. Fresh EMNLP 2025 results show that smaller models hallucinate far more than larger ones, but language effects vary widely; scale is no silver bullet.

- Why this matters. Hallucinations are not just an academic curiosity. In Mata v. Avianca (2023), a New York lawyer was sanctioned for submitting a brief containing fabricated citations generated by ChatGPT. And in a 2025 Scientific Reports study of three million mobile-app reviews, about 1.75% of user complaints were explicitly about hallucination-like errors, evidence that everyday users continue to encounter these failures.

Refresher: How Researchers Have Traditionally Explained Hallucinations

Before the 2025 research shift, the standard explanation was fairly simple. Hallucinations were seen as a side effect of three long-known factors:

- Noisy or biased training data: web-scale corpora inevitably carry outdated or false information.

- Model architecture and training quirks: exposure bias and limited context let small errors snowball.

- Decoding randomness: high temperature or sub-optimal sampling can nudge the model toward unlikely, sometimes fabricated, tokens.
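
To make the decoding point concrete, here is a minimal sketch of how temperature reshapes a next-token distribution. The logits are invented for illustration and the function name is our own; real decoders add further steps such as top-k or nucleus filtering.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Turn raw logits into probabilities after dividing by the temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical logits for three candidate tokens; the last one is the unlikely token.
logits = [4.0, 2.0, 0.0]
cool = softmax_with_temperature(logits, 0.5)
hot = softmax_with_temperature(logits, 2.0)

print(f"P(unlikely token) at T=0.5: {cool[-1]:.4f}")
print(f"P(unlikely token) at T=2.0: {hot[-1]:.4f}")
```

Raising the temperature flattens the distribution, so the low-probability token is sampled far more often than at a low temperature.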

Researchers also distinguished between two flavours of mistakes:

- Factuality errors: the model states incorrect facts.

- Faithfulness errors: it misrepresents or distorts the source or prompt.

These explanations still hold, but the latest research shows they capture only part of the picture and sets up the incentive-driven perspective that follows.

Incentives Reward Guessing

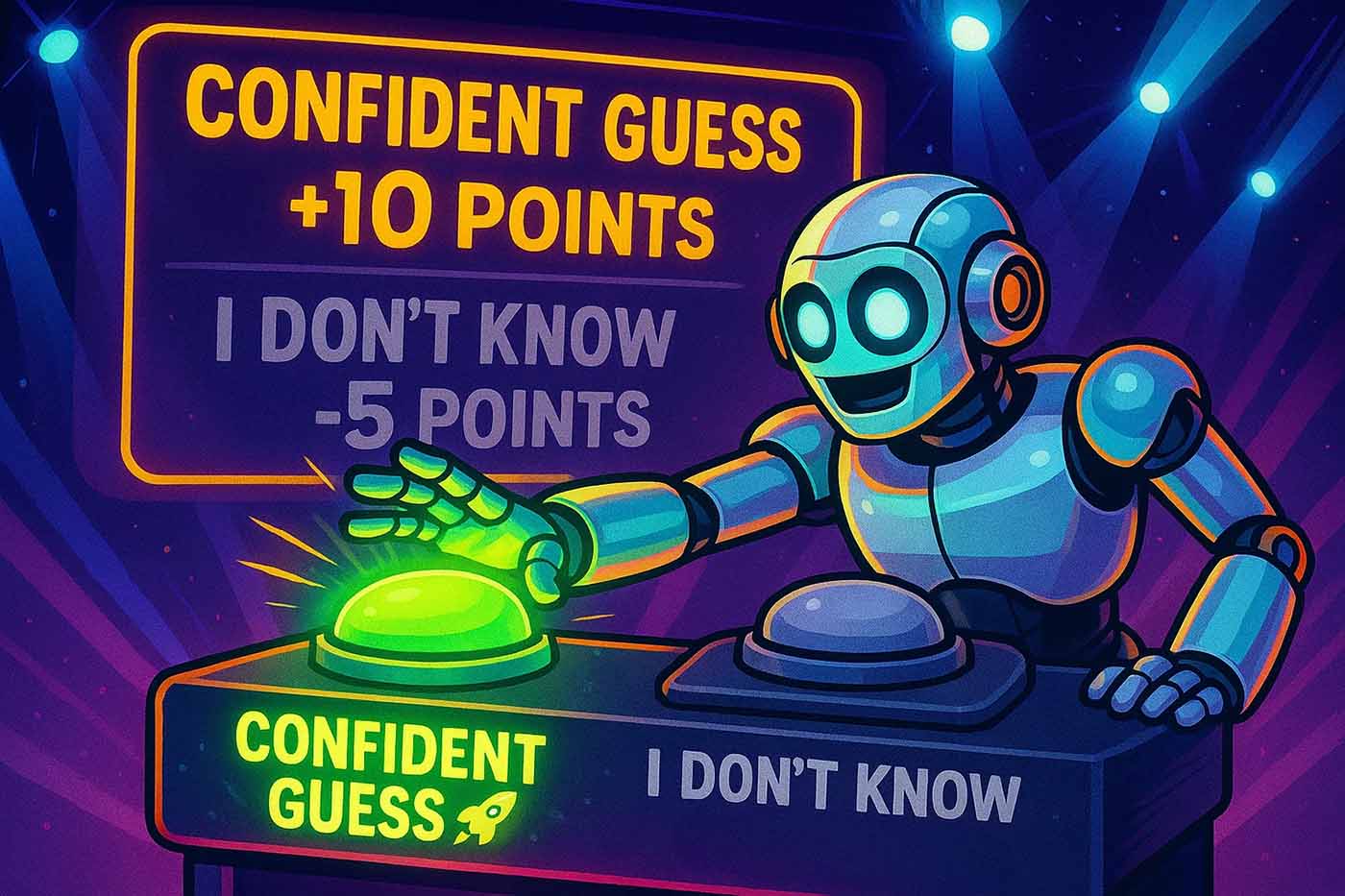

Recent studies, including OpenAI’s September 2025 paper on hallucinations, show that today’s training and evaluation regimes teach models that confident guessing pays off.

Next-token objectives reward outputs that look like plausible human text rather than ones that accurately convey uncertainty.

Benchmarks typically penalise abstention (“I don’t know”), and even RLHF (Reinforcement Learning from Human Feedback) stages can amplify the bias when human feedback favours long, detailed answers over merely correct ones. In the pairwise comparisons that RLHF relies on, human judges often pick the more confident response over one that carefully hedges, which reinforces a preference for confident guessing over cautious accuracy.

The model isn’t “choosing to lie”; it’s optimising the objectives we set.
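
A small worked example shows why guessing wins under common grading. The scoring scheme below is an illustrative assumption (correct = +1, wrong = minus a penalty, abstain = 0), not the exact rubric of any particular benchmark.

```python
def expected_score(p_correct, wrong_penalty):
    """Expected score of answering when correct=+1, wrong=-wrong_penalty, abstain=0."""
    return p_correct - (1.0 - p_correct) * wrong_penalty

def should_answer(p_correct, wrong_penalty):
    """Answering is rational only if it beats the abstention score of 0."""
    return expected_score(p_correct, wrong_penalty) > 0.0

# A model that is only 20% sure of its answer.
p = 0.2
for penalty in (0.0, 1.0):
    score = expected_score(p, penalty)
    print(f"penalty={penalty}: expected score {score:+.2f}, answer? {should_answer(p, penalty)}")
```

With no penalty for wrong answers, even a 20% guess has a positive expected score, so a score-maximising model never abstains. Once wrong answers cost something, answering pays only when `p_correct` exceeds `wrong_penalty / (1 + wrong_penalty)`.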

What are the implications?

More data or cleverer prompts won’t fix hallucinations while the underlying incentives stay the same.

Researchers are therefore focusing on calibration-aware metrics and reward schemes—approaches that give models credit for signalling uncertainty and treat refusal as a valid outcome.

This shift from fixing symptoms to changing the rules sets the stage for the mitigation strategies that follow.

Emerging Mitigations: What’s Been Working Since 2025

With hallucinations now understood as an incentive problem, ML researchers and foundation-model labs are testing concrete ways to reduce them without sacrificing a model’s usefulness. These are measures that model builders—not end-users—can implement, so for anyone deploying or integrating GenAI today, think of what follows as a preview of improvements that will gradually reach the models you rely on.

A 2025 multi-model study in npj Digital Medicine shows why this work matters: simple prompt-based mitigation cut GPT-4o’s hallucination rate from 53% to 23%, while temperature tweaks alone barely moved the needle.

Against that backdrop, the most effective approaches identified this year cluster into five main strategies.

1. Reward Models for Calibrated Uncertainty

Instead of penalising “I don’t know,” new reward schemes encourage abstention when evidence is thin.

Rewarding Doubt (2025) integrates confidence calibration into reinforcement learning, penalising both over- and underconfidence so model certainty better matches correctness.

Uncertainty-aware RLHF variants likewise score a cautious, evidence-backed answer higher than a verbose but unsupported one.

These methods tackle the core misalignment that OpenAI’s 2025 paper highlights: today’s training and evaluation still incentivise confident guessing over admitting uncertainty.
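
As a rough illustration of a calibration-aware reward, the sketch below uses a logarithmic scoring rule, one standard proper scoring rule. It is an assumption for illustration and not necessarily the exact reward used in Rewarding Doubt or any production RLHF pipeline.

```python
import math

def log_score_reward(confidence, is_correct, eps=1e-6):
    """Reward log(c) for a correct answer and log(1 - c) for a wrong one."""
    c = min(max(confidence, eps), 1.0 - eps)  # keep the logarithm finite
    return math.log(c) if is_correct else math.log(1.0 - c)

def expected_reward(confidence, true_accuracy):
    """Average reward if the model is right with probability true_accuracy."""
    return (true_accuracy * log_score_reward(confidence, True)
            + (1.0 - true_accuracy) * log_score_reward(confidence, False))

# Hypothetical model that is right 70% of the time on some class of questions.
true_accuracy = 0.7
candidates = [i / 20 for i in range(1, 20)]  # stated confidences 0.05 .. 0.95
best = max(candidates, key=lambda c: expected_reward(c, true_accuracy))
print(f"Stated confidence that maximises expected reward: {best:.2f}")
```

Under this rule, overstating confidence (say 0.95) and understating it (say 0.30) both lower the expected reward, so the best policy is to report a confidence that matches actual accuracy.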

2. Finetuning on Hallucination-Focused Datasets

Targeted preference finetuning is proving remarkably effective.

A NAACL 2025 study showed that creating synthetic examples of hallucination-prone translations and training models to prefer faithful outputs cut hallucination rates by roughly 90–96% without hurting quality.

The recipe:

- Generate examples that typically trigger hallucinations.

- Collect human or model-assisted judgments of faithful vs. unfaithful outputs.

- Finetune the model to prefer the faithful ones.

This approach travels well across domains: from medical QA to legal research to enterprise chat.
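
The recipe can be sketched as a preference pair plus a pairwise loss. The example below uses a DPO-style loss as a stand-in, which may differ from the objective used in the NAACL study. The `PreferencePair` class, the example texts, and the log-probabilities are all hypothetical.

```python
import math
from dataclasses import dataclass

@dataclass
class PreferencePair:
    prompt: str      # input known to trigger hallucinations
    faithful: str    # output judged faithful (preferred)
    unfaithful: str  # output judged unfaithful (rejected)

def dpo_loss(policy_faithful, policy_unfaithful, ref_faithful, ref_unfaithful, beta=0.1):
    """DPO-style loss for one pair, given sequence log-probabilities."""
    margin = beta * ((policy_faithful - ref_faithful)
                     - (policy_unfaithful - ref_unfaithful))
    # -log(sigmoid(margin)) equals log(1 + exp(-margin)); guard against overflow.
    if margin < -30.0:
        return -margin
    return math.log1p(math.exp(-margin))

pair = PreferencePair(
    prompt="Translate to English: 'Guten Morgen'",
    faithful="Good morning",
    unfaithful="Good morning, the meeting starts at nine",
)

# Hypothetical log-probabilities from the policy and a frozen reference model.
prefers_faithful = dpo_loss(-5.0, -9.0, -6.0, -6.0)
prefers_unfaithful = dpo_loss(-9.0, -5.0, -6.0, -6.0)
print(f"loss when policy favours the faithful output:   {prefers_faithful:.3f}")
print(f"loss when policy favours the unfaithful output: {prefers_unfaithful:.3f}")
```

The loss is lower when the policy assigns relatively more probability to the faithful output than the reference model does, which is the direction finetuning pushes.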

3. Retrieval-Augmented Generation with Span-Level Verification

Retrieval-augmented generation (RAG) helps, but simple document fetching isn’t enough.

Studies, including Stanford’s 2025 legal RAG reliability work, found that even well-curated retrieval pipelines can fabricate citations.

The most promising systems now add span-level verification: each generated claim is matched against retrieved evidence and flagged if unsupported, as shown in the REFIND SemEval 2025 benchmark.

Best practice today: combine RAG with automatic span checks and surface those verifications to users.
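
A minimal sketch of a span-level check follows. It treats each sentence as one claim and uses token overlap with the retrieved passages as a crude stand-in for the entailment or trained verifier models that real systems use. The function names and the 0.6 threshold are assumptions.

```python
import re

def tokens(text):
    """Lowercased alphanumeric tokens as a set."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def split_claims(answer):
    """Naive claim splitter: one claim per sentence."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", answer) if s.strip()]

def verify_spans(answer, passages, threshold=0.6):
    """Return (claim, support, is_supported) for each claim in the answer."""
    passage_tokens = [tokens(p) for p in passages]
    results = []
    for claim in split_claims(answer):
        claim_tokens = tokens(claim)
        support = 0.0
        if claim_tokens:
            # Best overlap with any single retrieved passage.
            support = max(
                (len(claim_tokens & pt) / len(claim_tokens) for pt in passage_tokens),
                default=0.0,
            )
        results.append((claim, support, support >= threshold))
    return results

passages = ["The court sanctioned the lawyer in 2023 for citing fabricated cases."]
answer = "The lawyer was sanctioned in 2023. The fine was ten million dollars."
results = verify_spans(answer, passages)
for claim, support, ok in results:
    label = "supported" if ok else "UNSUPPORTED"
    print(f"[{label}] {claim} (overlap {support:.2f})")
```

The second sentence has almost no overlap with the evidence, so it is flagged. Surfacing that flag to the user is the transparency step.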

4. Factuality-Based Reranking of Candidate Answers

Best-of-N style reranking can catch hallucinations after generation.

An ACL Findings 2025 study showed that evaluating multiple candidate responses with a lightweight factuality metric and choosing the most faithful one significantly lowers error rates, without retraining the model.
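
In outline, best-of-N reranking looks like the sketch below. The `factuality_score` function is a toy overlap metric of our own, standing in for whatever lightweight factuality metric a real system would plug in.

```python
import re

def factuality_score(candidate, evidence):
    """Toy metric: share of candidate tokens that also appear in the evidence."""
    cand = re.findall(r"[a-z0-9]+", candidate.lower())
    ev = set(re.findall(r"[a-z0-9]+", evidence.lower()))
    if not cand:
        return 0.0
    return sum(t in ev for t in cand) / len(cand)

def rerank(candidates, evidence, scorer=factuality_score):
    """Pick the candidate with the highest factuality score."""
    return max(candidates, key=lambda c: scorer(c, evidence))

evidence = "Mata v. Avianca was decided in 2023 in New York."
candidates = [
    "Mata v. Avianca was decided in 2019 in Texas.",
    "Mata v. Avianca was decided in 2023 in New York.",
    "The case was about a famous airline merger.",
]
best = rerank(candidates, evidence)
print(best)
```

Because the scorer is passed in as an argument, it can be swapped for a stronger metric without touching the generation step or retraining the model.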

5. Detecting Hallucinations From Inside the Model

When no external ground truth exists (creative tasks, proprietary corpora), teams turn inward.

Techniques such as Cross-Layer Attention Probing (CLAP) train lightweight classifiers on the model’s own activations to flag likely hallucinations in real time.
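
The general idea of probing can be shown with a plain logistic-regression probe. This is a simplified sketch, not CLAP itself: it ignores attention across layers, and the "activations" are synthetic vectors generated for illustration instead of real hidden states.

```python
import math
import random

def sigmoid(z):
    z = max(-30.0, min(30.0, z))  # clamp to avoid overflow in exp
    return 1.0 / (1.0 + math.exp(-z))

def train_probe(features, labels, lr=0.1, epochs=50):
    """Fit logistic-regression weights with plain stochastic gradient descent."""
    weights, bias = [0.0] * len(features[0]), 0.0
    for _ in range(epochs):
        for x, y in zip(features, labels):
            pred = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)
            err = pred - y
            weights = [w - lr * err * xi for w, xi in zip(weights, x)]
            bias -= lr * err
    return weights, bias

def hallucination_risk(probe, activation):
    """Probe output in [0, 1]; higher means more hallucination-like."""
    weights, bias = probe
    return sigmoid(sum(w * xi for w, xi in zip(weights, activation)) + bias)

rng = random.Random(0)

def fake_activation(is_hallucination):
    """Synthetic 3-d 'activation': only the first dimension carries signal."""
    shift = 1.5 if is_hallucination else -1.5
    return [rng.gauss(shift, 1.0), rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)]

labels = [i % 2 for i in range(200)]  # 1 = hallucinated, 0 = faithful
features = [fake_activation(y) for y in labels]
probe = train_probe(features, labels)

print(f"risk for a hallucination-like vector: {hallucination_risk(probe, [2.0, 0.0, 0.0]):.2f}")
print(f"risk for a faithful-like vector:      {hallucination_risk(probe, [-2.0, 0.0, 0.0]):.2f}")
```

In practice the features would be hidden states captured during generation and the labels would come from annotated outputs. The probe is small enough to run alongside decoding.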

The MetaQA framework (ACM 2025) goes a step further: it uses metamorphic prompt mutations to detect hallucinations even in closed-source models without relying on token probabilities or external tools.

These detectors give enterprises a way to monitor hallucination risk even when external validation is impossible.
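
For black-box models, a simple consistency check captures the spirit of mutation-based detection: ask the same question in several forms and measure agreement. This is a loose sketch and not MetaQA's actual procedure. The `stub_model` function and its canned answers are hypothetical stand-ins for a real model call.

```python
from collections import Counter

def consistency_score(ask, prompt_variants):
    """Share of variants whose normalised answer matches the most common answer."""
    answers = [ask(p).strip().lower() for p in prompt_variants]
    top_count = Counter(answers).most_common(1)[0][1]
    return top_count / len(answers)

def stub_model(prompt):
    """Hypothetical stand-in for a closed-source model; answers are canned."""
    canned = {
        "Which 2023 case involved fabricated ChatGPT citations?": "Mata v. Avianca",
        "Name the 2023 case where a brief cited fake ChatGPT cases.": "Mata v. Avianca",
        "In which 2023 case were ChatGPT-invented citations filed?": "mata v. avianca",
        "Who founded the fictional town of Quillhaven?": "Edmund Quill",
        "Quillhaven, a fictional town, was founded by whom?": "Sister Marta Haven",
        "Name the founder of the fictional town Quillhaven.": "The Quill brothers",
    }
    return canned[prompt]

prompts = [
    "Which 2023 case involved fabricated ChatGPT citations?",
    "Name the 2023 case where a brief cited fake ChatGPT cases.",
    "In which 2023 case were ChatGPT-invented citations filed?",
    "Who founded the fictional town of Quillhaven?",
    "Quillhaven, a fictional town, was founded by whom?",
    "Name the founder of the fictional town Quillhaven.",
]
stable = consistency_score(stub_model, prompts[:3])
unstable = consistency_score(stub_model, prompts[3:])
print(f"consistency on the grounded question:   {stable:.2f}")
print(f"consistency on the ungrounded question: {unstable:.2f}")
```

Low agreement across rephrasings is a warning sign that the model is guessing, and the check needs nothing beyond text in and text out.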

Key Takeaways from Today’s Mitigation Research

An August 2025 joint safety evaluation by OpenAI and Anthropic shows major labs converging on “Safe Completions” training, evidence that incentive-aligned methods are moving from research to practice.

For model users, the message is different: you can’t apply these training techniques yourself, but you can design your own systems with the assumption that hallucinations will decline, not disappear. Build in error-handling and transparency (for example, surfacing confidence scores or fallback workflows) the same way you’d plan for occasional human mistakes.
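
One way to build in that error-handling is a small routing step between the model and the user. The sketch below assumes the application already has some confidence estimate for each answer. The thresholds, the `ModelAnswer` class, and the messages are illustrative choices, not recommendations.

```python
from dataclasses import dataclass

@dataclass
class ModelAnswer:
    text: str
    confidence: float  # assumed to be supplied by the application, in [0, 1]

def route(answer, show_threshold=0.8, review_threshold=0.5):
    """Decide how to present an answer based on its confidence."""
    if answer.confidence >= show_threshold:
        return ("show", answer.text)
    if answer.confidence >= review_threshold:
        note = f"{answer.text} (confidence {answer.confidence:.0%}, please verify)"
        return ("show_with_warning", note)
    return ("fallback", "No reliable answer found. Escalating to a human reviewer.")

for answer in (
    ModelAnswer("The filing deadline is 30 days.", 0.92),
    ModelAnswer("The filing deadline is 45 days.", 0.60),
    ModelAnswer("The filing deadline is 90 days.", 0.20),
):
    action, message = route(answer)
    print(f"{action}: {message}")
```

The thresholds should be tuned against measured error rates for the task, since a confidence score is only useful if it is reasonably calibrated.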

The Changing Narrative: From Zero Hallucinations to Calibrated Uncertainty

Early discussions cast hallucinations as a bug to eradicate.

By 2025, the field had become more nuanced: researchers now focus on managing uncertainty, not chasing an impossible zero.

A 2025 Harvard Misinformation Review paper places LLM hallucinations within the broader mis- and disinformation ecosystem and argues that transparent uncertainty is essential for trustworthy information flows.

Frontier Models vs. Human Fallibility

At a 2025 Anthropic developer event, CEO Dario Amodei suggested that on some factual tasks frontier models may already hallucinate less often than humans.

It’s a provocative claim, but it highlights the real goal: predictable, measurable reliability, not perfection.

Benchmarks Keep Exposing Blind Spots

Benchmarks released in 2025, such as CCHall (ACL) for multimodal reasoning and Mu-SHROOM (SemEval) for multilingual hallucinations, show that even the latest models still fail in unexpected ways.

These tests underline the need for task-specific evaluation: a model solid in English text QA can still confabulate when reasoning across images or low-resource languages.

From Suppression to Transparency

Practical implications:

- Calibration metrics are gaining traction: modern systems are judged not only on accuracy but on how well they signal when they don’t know.

- Product design is catching up: more interfaces surface confidence scores, link to supporting evidence, or explicitly show “no answer found” messages. Recent UX pattern collections highlight “convey model confidence” as a core GenAI design principle.
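
Expected Calibration Error (ECE) is one widely used calibration metric. The sketch below is a minimal version with equal-width bins, and the confidence values and outcomes are made up for illustration.

```python
def expected_calibration_error(confidences, correct, n_bins=10):
    """Weighted average gap between stated confidence and accuracy per bin."""
    bins = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        idx = min(int(conf * n_bins), n_bins - 1)  # confidence 1.0 goes in the last bin
        bins[idx].append((conf, ok))
    total = len(confidences)
    ece = 0.0
    for bucket in bins:
        if not bucket:
            continue
        avg_conf = sum(c for c, _ in bucket) / len(bucket)
        accuracy = sum(1 for _, ok in bucket if ok) / len(bucket)
        ece += (len(bucket) / total) * abs(avg_conf - accuracy)
    return ece

outcomes = [True] * 5 + [False] * 5  # the model is right half of the time

overconfident = expected_calibration_error([0.95] * 10, outcomes)
calibrated = expected_calibration_error([0.5] * 10, outcomes)
print(f"ECE when claiming 95% confidence: {overconfident:.2f}")
print(f"ECE when claiming 50% confidence: {calibrated:.2f}")
```

Both hypothetical models have the same accuracy, but only the second signals its uncertainty honestly, which is the difference a calibration metric is meant to expose.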

The new mindset accepts that large probabilistic models will sometimes err, but insists their uncertainty must be visible, predictable, and interpretable.

Summary & Key Takeaways

LLM hallucinations remain a persistent challenge, but our approach has changed.

Classic causes such as noisy data, architectural quirks, and decoding randomness still matter, but the latest research reframes hallucinations as a systemic incentive issue.

Training objectives and benchmarks often reward confident guessing over calibrated uncertainty, and that insight drives a new generation of mitigations:

- Fix incentives first with calibration-aware rewards and uncertainty-friendly evaluation metrics.

- Strengthen models through targeted finetuning and retrieval pipelines with span-level verification.

- Monitor and detect with internal probes when no external ground truth exists.

- Design for transparency so users see confidence scores or “no answer found” messages instead of hidden uncertainty.

The field has shifted from chasing zero hallucinations to managing uncertainty in a measurable, predictable way.

For teams building GenAI applications, the path forward is to pair the new strategies with the foundational lessons about classic failure modes.

Further Reading on GenAI Foundations

If you’d like a broader understanding of the GenAI foundations that today’s hallucination research builds on, check out these Lakera guides:

- Red Teaming GenAI Applications – how security teams stress-test models to uncover hidden weaknesses.

- Prompt Engineering Guide – core prompting principles and an introduction to adversarial prompts.

- Fine-Tuning Large Language Models – when and how to adapt models with domain-specific data.

- In-Context Learning Strategies – how to steer model behaviour using examples and context.

- Retrieval-Augmented Generation (RAG) – anchoring models to external sources to improve factual grounding.
