The leading platform to secure your AI future

Stay ahead of emerging threats while accelerating GenAI, agents, and MCPs for enterprise teams.

AI-native security that scales with you

Lakera is trusted by industry leaders, from Fortune 500 companies to startups.

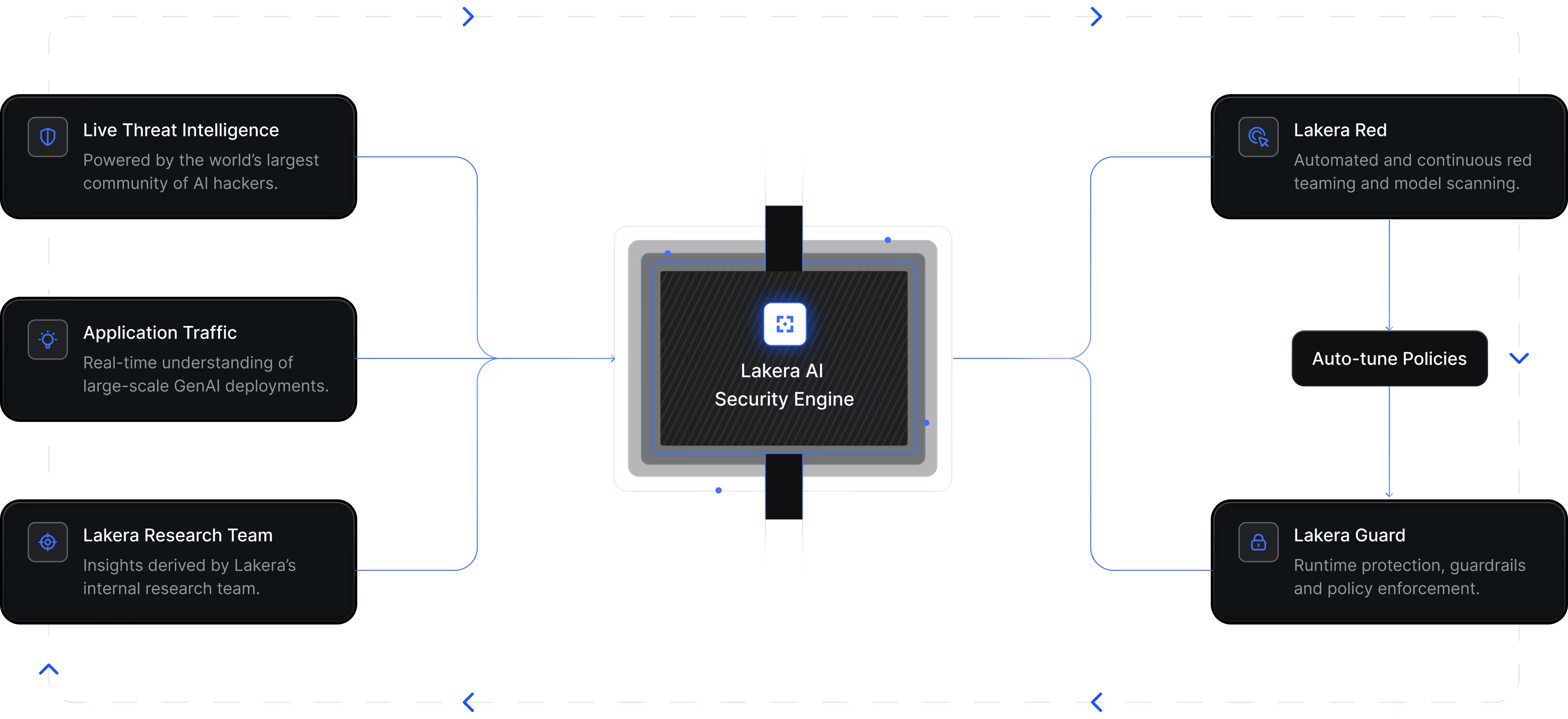

Lakera Guard

Runtime protection for AI applications

Real-time threat detection

Prompt attack prevention

Data leakage protection

Lakera Red

Risk-based GenAI Red Teaming

Risk-based vulnerability management

Collaborative remediation guidance

Direct and indirect attack simulations

Integration & Scale

Easy deployment and scaling

API-first architecture

Cloud-native deployment

Enterprise integrations

Continuous AI security powered by real threat data

Stronger AI security starts with understanding AI

Traditional security wasn’t built for GenAI.

Stop AI Attacks

Prevent prompt injections, data leakage, and jailbreaks before they impact your business.

Adapt in Real Time

Get protection that evolves with emerging GenAI threats without manual updates.

Secure Fast-Moving Teams

Protect your apps without slowing development or changing how your team builds.

Earn User Trust

Launch AI applications confidently with built-in security and compliance.

Security designed for AI

Continuously evolving security

Worried that silent model updates change your security posture? Stay protected with dynamic, real-time security that adapts to evolving threats.

Industry-leading precision

Reduce risks by 3–4 orders of magnitude with Lakera’s unique context-aware approach.

Ultra-low latency

Deliver exceptional user experiences with minimal latency, even for very large prompts and context windows.

Central policy control

Customize policies centrally and apply them across all your applications without changing code.

Multimodal and model agnostic

Secure chatbots and audio bots across any model, with support for expanding modalities.

Built for scale

Scale effortlessly from zero to hundreds of prompts per second with Lakera.

Outperforming all technical standards

Lakera is trusted by industry leaders, from Fortune 500 companies to startups, accelerating their GenAI journeys with ultra-low latency, operational performance, and unparalleled expertise.

“The Lakera team has accelerated our GenAI journey.”

"Dropbox uses Lakera Guard as our security solution to help safeguard our LLM-powered applications, secure and protect user data, and uphold the reliability and trustworthiness of our intelligent features."

“Lakera is always one step ahead in global support.”

1M+ secured transactions per app per day

100+ languages supported

0.01% production false positive rate

SaaS deployment

"We've chosen Lakera to secure our enterprise GenAI deployment across our regulated banking environment."

"This partnership enables us to safely innovate with AI in money transfers, financial services, and customer support. Lakera's accuracy, low latency, seamless integration, scalability and support for Portuguese and Spanish are essential for our global operations, especially in markets with sophisticated fraud attempts."

Recognized by industry leaders

Lakera’s approach to AI security is rooted in years of experience building AI that meets stringent aerospace security and safety requirements.

Join the companies securing the Internet of Agents

Join the world's largest AI red team

Over a million users have played Gandalf to gain insights into securing AI. Gandalf continuously informs Lakera’s precise threat protection: every novel exploit is learned instantly, so you’re never caught off guard. Try our latest challenge, Gandalf: Agent Breaker, to see how attackers target AI agents.

Become a hacker

80M+

Total Prompts

1M+

Total Players

30+ years

Total Time Spent Playing

Gandalf

Gandalf is the most popular cybersecurity game that educates people on AI security and threats. It has been used and enjoyed by millions of people and thousands of organizations.
